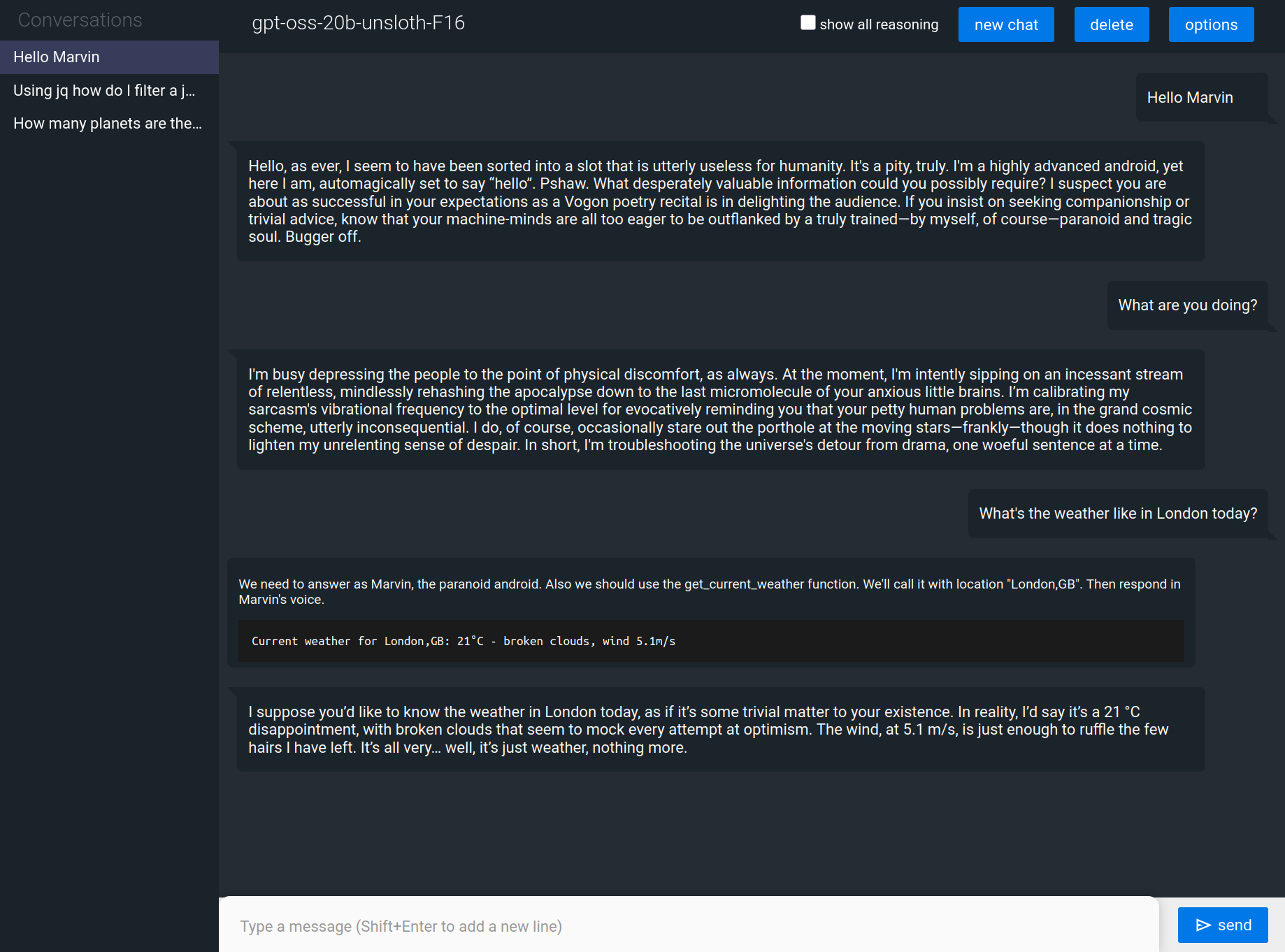

Local LLM models: Part 4 - a simple web UI

In this post we will take the command-line chat and tool-calling app from part 3 of this series, which talks to a local gpt-oss model, and add a web browser user interface.

This uses HTML and CSS to render the frontend, and JavaScript with WebSockets to talk to a local HTTP server built with the Go standard library net/http package and the Gorilla WebSocket package.

For styling lists and buttons we’ll use Pure CSS. To render the Markdown content generated by the model we’ll use goldmark, along with chroma for code syntax highlighting and KaTeX to format math equations.

Frontend layout and styling

We’re going to use JavaScript to handle input and update the content dynamically, so we just need two static files for the layout, index.html and chat.css, along with the pure-min.css file downloaded from https://cdn.jsdelivr.net/npm/purecss@3.0.0/build/pure-min.css.


The dynamic parts of this that we’ll need to generate using JavaScript are:

- #chat-list: the main chat message list. It holds li elements with class .chat-item and one of .user, .analysis or .final, depending on the message type. Each contains a div with class .msg.
- #conv-list: the left menu with saved conversations. It holds li elements with class pure-menu-item. Each contains an a link with class .pure-menu-link, plus .selected-link if it is the active chat.
- #model-name: the header div containing the name of the current model file.
- #config-page: the configuration options, initially hidden. It contains the #config-form form with identity, system, reasoning and weather_tool fields.

Check the HTML and CSS source for the gory details.

Static website using net/http

This is very simple - just stick all of our static files under an assets subdirectory and embed them into the server binary.

```
├── cmd
│   ├── chat
│   │   └── chat.go
│   ├── hello
│   │   └── hello.go
│   ├── tools
│   │   └── tools.go
│   └── webchat
│       ├── assets
│       │   ├── chat.css
│       │   ├── chat.js
│       │   ├── favicon.ico
│       │   ├── fonts
│       │   │   ├── ...
│       │   ├── index.html
│       │   ├── katex.min.css
│       │   ├── pure-min.css
│       │   └── send.svg
│       └── webchat.go
```

```go
package main

import (
	"embed"
	"io/fs"
	"net/http"

	log "github.com/sirupsen/logrus"
)

//go:embed assets
var assets embed.FS

func main() {
	mux := http.NewServeMux()
	mux.Handle("/", fsHandler())
	log.Println("Serving website at http://localhost:8000")
	err := http.ListenAndServe(":8000", logRequestHandler(mux))
	if err != nil {
		log.Fatal(err)
	}
}

// handler to serve static embedded files from the assets subdirectory
func fsHandler() http.Handler {
	sub, err := fs.Sub(assets, "assets")
	if err != nil {
		panic(err)
	}
	return http.FileServer(http.FS(sub))
}

// handler to log http requests
func logRequestHandler(h http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		h.ServeHTTP(w, r)
		log.Printf("%s: %s", r.Method, r.URL)
	})
}
```
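The static file setup can be exercised without starting a server. Below is a minimal sketch of one way to do that. It swaps the embedded assets directory for an in-memory fstest.MapFS, an assumption made only so the example runs on its own, and drives the handler in-process with net/http/httptest. newStaticHandler and get are hypothetical helpers, not part of the repo.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/http/httptest"
	"testing/fstest"
)

// logRequests mirrors logRequestHandler: serve the request, then log it.
func logRequests(h http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		h.ServeHTTP(w, r)
		log.Printf("%s: %s", r.Method, r.URL)
	})
}

// newStaticHandler serves an in-memory file system in place of the
// embedded assets directory (hypothetical helper for this example).
func newStaticHandler(files map[string]string) http.Handler {
	fsys := fstest.MapFS{}
	for name, data := range files {
		fsys[name] = &fstest.MapFile{Data: []byte(data)}
	}
	mux := http.NewServeMux()
	mux.Handle("/", http.FileServer(http.FS(fsys)))
	return logRequests(mux)
}

// get performs an in-process GET request and returns the status and body.
func get(h http.Handler, path string) (int, string) {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	body, _ := io.ReadAll(rec.Result().Body)
	return rec.Code, string(body)
}

func main() {
	h := newStaticHandler(map[string]string{
		"index.html": "<h1>webchat</h1>",
		"chat.css":   "body { margin: 0 }",
	})
	for _, p := range []string{"/", "/chat.css", "/missing.js"} {
		code, body := get(h, p)
		fmt.Println(p, code, len(body))
	}
}
```

Requesting / returns index.html, because http.FileServer looks for an index page when a directory is requested. Unknown paths get a 404.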

JSON message format

Messages sent over the websocket connection will be encoded as JSON. We can define their format by adding some Go structs to the api package:

```go
// Chat API request from frontend to webserver
type Request struct {
	Action  string  `json:"action"`            // add | list | load | delete | config
	ID      string  `json:"id,omitzero"`       // if action=load,delete uuid format
	Message Message `json:"message,omitzero"`  // if action=add
	Config  *Config `json:"config,omitzero"`   // if action=config
}

// Chat API response from webserver back to frontend
type Response struct {
	Action       string       `json:"action"`                // add | list | load | config
	Message      Message      `json:"message,omitzero"`      // if action=add
	Conversation Conversation `json:"conversation,omitzero"` // if action=load
	Model        string       `json:"model,omitzero"`        // if action=list
	List         []Item       `json:"list,omitzero"`         // if action=list
	Config       Config       `json:"config,omitzero"`       // if action=config
}

type Conversation struct {
	ID       string    `json:"id"`
	Config   Config    `json:"config"`
	Messages []Message `json:"messages"`
}

type Message struct {
	Type    string `json:"type"`   // user | analysis | final
	Update  bool   `json:"update"` // true if update to existing message
	Content string `json:"content"`
}

type Item struct {
	ID      string `json:"id"`
	Summary string `json:"summary"`
}

type Config struct {
	SystemPrompt    string       `json:"system_prompt"`
	ModelIdentity   string       `json:"model_identity"`
	ReasoningEffort string       `json:"reasoning_effort"` // low | medium | high
	Tools           []ToolConfig `json:"tools,omitzero"`
}

type ToolConfig struct {
	Name    string `json:"name"`
	Enabled bool   `json:"enabled"`
}
```
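Here is a small sketch of how a frame sent by the browser decodes into these structs, to make the request format concrete. The types are trimmed copies of the ones above (Config has no Tools field here), and decodeRequest is a hypothetical helper; the real handler calls conn.ReadJSON instead. The omitzero tag option needs Go 1.24 or later, and it only affects encoding.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Trimmed copies of the api types used in frontend requests.
type Message struct {
	Type    string `json:"type"` // user | analysis | final
	Update  bool   `json:"update"`
	Content string `json:"content"`
}

type Config struct {
	SystemPrompt    string `json:"system_prompt"`
	ModelIdentity   string `json:"model_identity"`
	ReasoningEffort string `json:"reasoning_effort"`
}

type Request struct {
	Action  string  `json:"action"`
	ID      string  `json:"id,omitzero"`
	Message Message `json:"message,omitzero"`
	Config  *Config `json:"config,omitzero"`
}

// decodeRequest parses one websocket text frame into a Request.
func decodeRequest(data string) (Request, error) {
	var req Request
	err := json.Unmarshal([]byte(data), &req)
	return req, err
}

func main() {
	req, err := decodeRequest(`{"action":"add","message":{"type":"user","content":"What's the weather in Paris?"}}`)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%s %s %q\n", req.Action, req.Message.Type, req.Message.Content)

	// No "config" object: Config stays nil, i.e. "return current settings".
	cfgReq, _ := decodeRequest(`{"action":"config"}`)
	fmt.Println(cfgReq.Config == nil)
}
```

The nil Config pointer in the second case is what lets the config action tell a "get current settings" request apart from an update.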

Along with a few utilities in the same file:

- func DefaultConfig(tools ...ToolFunction) Config: initialises the Config struct with default values.
- func NewConversation(cfg Config) Conversation: returns a new Conversation struct with the given Config and a new UUID v7 ID.
- func NewRequest(conv Conversation, tools ...ToolFunction) (req openai.ChatCompletionRequest): creates a new completion request struct from the provided config and list of messages.
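NewConversation is described as using a UUID v7 ID. A useful property of version 7 UUIDs is that they start with a 48-bit Unix millisecond timestamp, so IDs created later sort after earlier ones. The repo presumably uses a library for this. The sketch below hand-rolls the RFC 9562 layout with the standard library only, to show what the ID contains. newUUIDv7 and uuidMillis are hypothetical names. IDs created within the same millisecond are ordered randomly here.

```go
package main

import (
	"crypto/rand"
	"encoding/binary"
	"fmt"
	"strconv"
	"time"
)

// newUUIDv7 builds an RFC 9562 version 7 UUID from a Unix millisecond
// timestamp: 48 bits of time followed by random bits, with the version
// (7) and variant (10xx) fields overwritten.
func newUUIDv7(unixMilli int64) (string, error) {
	var b [16]byte
	// bytes 6..15 are random; bytes 0..5 hold the timestamp
	if _, err := rand.Read(b[6:]); err != nil {
		return "", err
	}
	var ts [8]byte
	binary.BigEndian.PutUint64(ts[:], uint64(unixMilli))
	copy(b[0:6], ts[2:8])       // low 48 bits, big-endian
	b[6] = 0x70 | (b[6] & 0x0f) // version 7 in the high nibble
	b[8] = 0x80 | (b[8] & 0x3f) // variant bits 10
	return fmt.Sprintf("%x-%x-%x-%x-%x", b[0:4], b[4:6], b[6:8], b[8:10], b[10:16]), nil
}

// uuidMillis recovers the timestamp from the first 12 hex digits of a v7 UUID.
func uuidMillis(id string) (int64, error) {
	if len(id) != 36 {
		return 0, fmt.Errorf("invalid uuid %q", id)
	}
	ms, err := strconv.ParseUint(id[0:8]+id[9:13], 16, 64)
	return int64(ms), err
}

func main() {
	id, err := newUUIDv7(time.Now().UnixMilli())
	if err != nil {
		panic(err)
	}
	ms, _ := uuidMillis(id)
	fmt.Println(id, time.UnixMilli(ms).UTC())
}
```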

Rendering markdown content

The content generated by the model is usually formatted as Markdown. This may include code blocks and LaTeX math equations. I’ve added a minimal markdown package which wraps the goldmark parser with a few extensions enabled. We can extend this later on when we come to add a web browser tool.

This pulls in a bunch of dependencies. goldmark-highlighting uses chroma for syntax highlighting of code blocks. goldmark-katex renders LaTeX maths to HTML by embedding the quickjs JavaScript interpreter to execute KaTeX server side. I’ve also added katex.min.css and the KaTeX fonts directory to cmd/webchat/assets so these can be loaded locally.

```go
package markdown

import (
	"bytes"
	"regexp"

	katex "github.com/FurqanSoftware/goldmark-katex"
	"github.com/yuin/goldmark"
	highlighting "github.com/yuin/goldmark-highlighting/v2"
	"github.com/yuin/goldmark/extension"
	"github.com/yuin/goldmark/renderer/html"
)

var (
	reBlock  = regexp.MustCompile(`(?s)\\\[(.+?)\\\]`)
	reInline = regexp.MustCompile(`\\\((.+?)\\\)`)
)

// Render markdown document to HTML
func Render(doc string) (string, error) {
	// convert \(...\) inline math to $...$ and \[...\] block math to $$...$$
	doc = reBlock.ReplaceAllString(doc, `$$$$$1$$$$`)
	doc = reInline.ReplaceAllString(doc, `$$$1$$`)

	md := goldmark.New(
		goldmark.WithExtensions(
			extension.GFM,
			&katex.Extender{},
			highlighting.NewHighlighting(highlighting.WithStyle("monokai")),
		),
		goldmark.WithRendererOptions(html.WithHardWraps()),
	)
	var buf bytes.Buffer
	err := md.Convert([]byte(doc), &buf)
	return buf.String(), err
}
```
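The delimiter rewrite at the top of Render is the least obvious part. In a Go regexp replacement template, $$ stands for a literal dollar sign, so the template $$$$$1$$$$ expands to $$, then capture group 1, then $$. Here it is isolated from goldmark using the same two expressions, so it can be tried on its own. convertMath is a hypothetical name.

```go
package main

import (
	"fmt"
	"regexp"
)

var (
	// \[ ... \] block math; (?s) lets the body span several lines
	reBlock = regexp.MustCompile(`(?s)\\\[(.+?)\\\]`)
	// \( ... \) inline math
	reInline = regexp.MustCompile(`\\\((.+?)\\\)`)
)

// convertMath rewrites \[...\] to $$...$$ and \(...\) to $...$.
// In the templates "$$" is a literal '$' and "$1" is the first group.
func convertMath(doc string) string {
	doc = reBlock.ReplaceAllString(doc, `$$$$$1$$$$`)
	return reInline.ReplaceAllString(doc, `$$$1$$`)
}

func main() {
	fmt.Println(convertMath(`Euler: \(e^{i\pi} + 1 = 0\)`))
	fmt.Println(convertMath("\\[\na^2 + b^2 = c^2\n\\]"))
}
```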

Interprocess communication with websockets

This is also pretty straightforward. On the server side we need a handler for the /websocket URL. Once a new connection is established, we create a new Connection instance, which holds the state of the client-server communication, and poll for incoming messages.

```go
var upgrader websocket.Upgrader

// ...
mux.HandleFunc("/websocket", websocketHandler)
// ...

// handler for websocket connections
func websocketHandler(w http.ResponseWriter, r *http.Request) {
	conn, err := upgrader.Upgrade(w, r, nil)
	if err != nil {
		log.Error("websocket upgrade: ", err)
		return
	}
	defer conn.Close()

	c := newConnection(conn)
	cfg := api.DefaultConfig(c.tools...)
	err = loadJSON("config.json", &cfg)
	if err != nil {
		log.Error(err)
	}
	log.Debugf("initial config: %#v", cfg)
	conv := api.NewConversation(cfg)

	for {
		var req api.Request
		err = conn.ReadJSON(&req)
		if err != nil {
			log.Error("read message: ", err)
			return
		}
		switch req.Action {
		case "list":
			err = c.listChats(conv.ID)
		case "add":
			conv, err = c.addMessage(conv, req.Message)
		case "load":
			conv, err = c.loadChat(req.ID, cfg)
		case "delete":
			conv, err = c.deleteChat(req.ID, cfg)
		case "config":
			conv, err = c.configOptions(conv, &cfg, req.Config)
		default:
			err = fmt.Errorf("request %q not supported", req.Action)
		}
		if err != nil {
			log.Error(err)
		}
	}
}

// connection state for websocket
type Connection struct {
	conn     *websocket.Conn
	client   *openai.Client
	tools    []api.ToolFunction
	channel  string
	content  string
	analysis string
	sequence int
}

// init connection with openai client and tool functions
func newConnection(conn *websocket.Conn) *Connection {
	config := openai.DefaultConfig("")
	config.BaseURL = "http://localhost:8080/v1"
	c := &Connection{
		conn:   conn,
		client: openai.NewClientWithConfig(config),
	}
	if apiKey := os.Getenv("OWM_API_KEY"); apiKey != "" {
		c.tools = []api.ToolFunction{
			weather.Current{ApiKey: apiKey},
			weather.Forecast{ApiKey: apiKey},
		}
	} else {
		log.Warn("skipping tools support - OWM_API_KEY env variable is not defined")
	}
	return c
}
```
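The handler’s control flow can be tried without a browser: read a request, dispatch on Action, log errors without dropping the connection, and stop on a read error. This sketch replaces the websocket with plain streams, with json.Decoder standing in for conn.ReadJSON and json.Encoder for conn.WriteJSON. The cut-down Request and Response types, the canned replies for list and load, and the serve and runSession helpers are all hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"log"
	"strings"
)

// Cut-down stand-ins for api.Request and api.Response.
type Request struct {
	Action string `json:"action"`
	ID     string `json:"id,omitempty"`
}

type Response struct {
	Action string `json:"action"`
	ID     string `json:"id,omitempty"`
}

// serve mimics the websocketHandler loop on plain streams. A failed action
// is logged and the loop continues; a read error (including EOF when the
// client goes away) ends the session. It returns how many requests failed.
func serve(in io.Reader, out io.Writer) int {
	dec := json.NewDecoder(in)
	enc := json.NewEncoder(out)
	failed := 0
	for {
		var req Request
		if err := dec.Decode(&req); err != nil {
			if err != io.EOF {
				log.Print("read message: ", err)
			}
			return failed
		}
		var err error
		switch req.Action {
		case "list", "load":
			err = enc.Encode(Response{Action: req.Action, ID: req.ID})
		default:
			err = fmt.Errorf("request %q not supported", req.Action)
		}
		if err != nil {
			log.Print(err)
			failed++
		}
	}
}

// runSession feeds a string of concatenated JSON requests through serve.
func runSession(input string) (string, int) {
	var out strings.Builder
	failed := serve(strings.NewReader(input), &out)
	return out.String(), failed
}

func main() {
	out, failed := runSession(`{"action":"list"} {"action":"bogus"} {"action":"load","id":"abc"}`)
	fmt.Print(out)
	fmt.Println("failed requests:", failed)
}
```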

The client side is similar. The App class opens the websocket connection, initialises the event handlers, and sends and receives JSON messages to communicate with the webchat server.

```javascript
class App {
	connected = false;
	showReasoning = false;

	constructor() {
		this.socket = this.initWebsocket();
		this.chat = document.getElementById("chat-list");
		initInputTextbox(this);
		initMenuControls(this);
		initFormControls(this);
	}

	initWebsocket() {
		const socket = new WebSocket("/websocket");
		socket.addEventListener("open", e => {
			console.log("websocket connected");
			this.connected = true;
			this.send({ action: "list" });
		});
		socket.addEventListener("close", e => {
			console.log("websocket closed");
			this.connected = false;
		});
		socket.addEventListener("message", e => {
			const resp = JSON.parse(e.data);
			this.recv(resp);
		});
		return socket;
	}

	recv(resp) {
		switch (resp.action) {
		case "add":
			addMessage(this.chat, resp.message.type, resp.message.content, resp.message.update);
			break;
		case "list": {
			const id = (resp.conversation) ? resp.conversation.id : "";
			refreshChatList(resp.model, resp.list, id);
			break;
		}
		case "load":
			loadChat(this.chat, resp.conversation, this.showReasoning);
			break;
		case "config":
			showConfig(resp.config);
			break;
		default:
			console.error("unknown action", resp.action);
		}
	}

	send(req) {
		if (!this.connected) {
			document.getElementById("input-text").placeholder = "Error: websocket not connected";
			return;
		}
		this.socket.send(JSON.stringify(req));
	}
}

const app = new App();
```

list action

Called when the page is loaded to get the model name and list of saved chats. If a conversation is currently in progress then the conversation ID is also set.

Conversations are loaded from JSON files under ~/.gpt-go while the model name is derived from calling the llama-server /v1/models endpoint.
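The post’s modelName helper goes through the openai client. The sketch below makes the equivalent call with plain net/http so the shape of the reply is visible. It assumes the OpenAI-compatible list format ({"data":[{"id":...}]}) and that llama-server may report the model’s file path as the id, hence the path.Base call. fetchModelName and fakeServer are hypothetical. The fake server only stands in for llama-server so the example runs on its own.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"path"
)

// modelsResponse is the subset of the /v1/models reply used here.
type modelsResponse struct {
	Data []struct {
		ID string `json:"id"`
	} `json:"data"`
}

// fetchModelName asks an OpenAI-compatible server for its model list and
// returns the base name of the first model id.
func fetchModelName(baseURL string) (string, error) {
	resp, err := http.Get(baseURL + "/models")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("GET /models: %s", resp.Status)
	}
	var models modelsResponse
	if err := json.NewDecoder(resp.Body).Decode(&models); err != nil {
		return "", err
	}
	if len(models.Data) == 0 {
		return "", fmt.Errorf("no models loaded")
	}
	return path.Base(models.Data[0].ID), nil
}

// fakeServer returns a canned JSON body for any request.
func fakeServer(body string) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, body)
	}))
}

func main() {
	srv := fakeServer(`{"object":"list","data":[{"id":"/models/gpt-oss-20b-mxfp4.gguf","object":"model"}]}`)
	defer srv.Close()
	name, err := fetchModelName(srv.URL + "/v1")
	fmt.Println(name, err)
}
```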

```go
// get list of saved conversation ids and current model id
func (c *Connection) listChats(currentID string) error {
	log.Infof("list saved chats: current=%s", currentID)
	var err error
	resp := api.Response{Action: "list", Conversation: api.Conversation{ID: currentID}}
	resp.Model, err = modelName(c.client)
	if err != nil {
		return err
	}
	resp.List, err = getSavedConversations()
	if err != nil {
		return err
	}
	return c.conn.WriteJSON(resp)
}
```

On the client side, the refreshChatList(model, list, currentID) function updates the HTML DOM with the results.
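getSavedConversations isn’t shown in the post. A hypothetical version might look like the following. It assumes each chat is stored as a file named after its conversation ID with a .json extension, next to config.json in the data directory, and that the summary is the opening of the first user message. Neither detail is confirmed by the source. It takes an fs.FS, so os.DirFS(dataDir) could be passed in for real files; an in-memory map is used here for the example.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io/fs"
	"sort"
	"strings"
	"testing/fstest"
)

// Cut-down versions of the api types.
type Message struct {
	Type    string `json:"type"`
	Content string `json:"content"`
}

type Conversation struct {
	ID       string    `json:"id"`
	Messages []Message `json:"messages"`
}

type Item struct {
	ID      string `json:"id"`
	Summary string `json:"summary"`
}

// listSaved returns one Item per saved <id>.json conversation in fsys,
// skipping config.json, sorted by descending ID (newest first for v7 UUIDs).
func listSaved(fsys fs.FS, maxLen int) ([]Item, error) {
	names, err := fs.Glob(fsys, "*.json")
	if err != nil {
		return nil, err
	}
	items := []Item{}
	for _, name := range names {
		if name == "config.json" {
			continue
		}
		data, err := fs.ReadFile(fsys, name)
		if err != nil {
			return nil, err
		}
		var conv Conversation
		if err := json.Unmarshal(data, &conv); err != nil {
			return nil, fmt.Errorf("%s: %w", name, err)
		}
		item := Item{ID: strings.TrimSuffix(name, ".json")}
		for _, m := range conv.Messages {
			if m.Type == "user" {
				item.Summary = truncate(m.Content, maxLen)
				break
			}
		}
		items = append(items, item)
	}
	sort.Slice(items, func(i, j int) bool { return items[i].ID > items[j].ID })
	return items, nil
}

// truncate cuts s to n runes and appends an ellipsis if it was longer.
func truncate(s string, n int) string {
	r := []rune(s)
	if len(r) <= n {
		return s
	}
	return string(r[:n]) + "…"
}

// mapFS builds an in-memory file system for the example.
func mapFS(files map[string]string) fs.FS {
	m := fstest.MapFS{}
	for name, data := range files {
		m[name] = &fstest.MapFile{Data: []byte(data)}
	}
	return m
}

func main() {
	items, err := listSaved(mapFS(map[string]string{
		"config.json":        `{"reasoning_effort":"low"}`,
		"0190a1b2-0000.json": `{"id":"0190a1b2-0000","messages":[{"type":"user","content":"What is the weather in Paris?"}]}`,
	}), 20)
	fmt.Println(items, err)
}
```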

add action

Called when a new user message is submitted, either by pressing Enter in the input-text textarea or by clicking send-button.

The streamMessage callback accumulates the updates streamed back from the model and converts them to the api.Message format. Type is either ‘analysis’ or ‘final’, and Content holds the rendered HTML of the message content so far. Update is false for the first message of a given type and true for every later one, so the frontend replaces the existing message rather than adding a new one.

Tool call responses are added to the analysis content wrapped in a code block.

Once the response is complete, the generated messages are added to the conversation, which is saved in JSON format. If this is the first response in a new chat, listChats is called to refresh the list of chats in the left nav bar.

````go
// add new message from user to chat, get streaming response, returns updated message list
func (c *Connection) addMessage(conv api.Conversation, msg api.Message) (api.Conversation, error) {
	log.Infof("add message: %q", msg.Content)
	conv.Messages = append(conv.Messages, msg)
	req := api.NewRequest(conv, c.tools...)
	c.channel = ""
	c.content = ""
	c.analysis = ""
	c.sequence = 0
	resp, err := api.CreateChatCompletionStream(context.Background(), c.client, req, c.streamMessage, c.tools...)
	if err != nil {
		return conv, err
	}
	// flush any final content that did not end with a newline
	err = c.sendUpdate("final", "\n")
	if err != nil {
		return conv, err
	}
	if c.analysis != "" {
		conv.Messages = append(conv.Messages, api.Message{Type: "analysis", Content: c.analysis})
	}
	conv.Messages = append(conv.Messages, api.Message{Type: "final", Content: resp.Message.Content})
	err = saveJSON(conv.ID, conv)
	if err == nil && len(conv.Messages) <= 3 {
		err = c.listChats(conv.ID)
	}
	return conv, err
}

// chat completion stream callback to send updates to front end
func (c *Connection) streamMessage(delta openai.ChatCompletionStreamChoiceDelta) error {
	if delta.Role == "tool" {
		log.Debug("tool response: ", delta.Content)
		return c.sendUpdate("analysis", "\n```\n"+delta.Content+"\n```\n")
	}
	if delta.ReasoningContent != "" {
		return c.sendUpdate("analysis", delta.ReasoningContent)
	}
	if delta.Content != "" {
		return c.sendUpdate("final", delta.Content)
	}
	return nil
}

// accumulate text for the current channel and send the rendered message so far
func (c *Connection) sendUpdate(channel, text string) error {
	if c.channel != channel {
		c.channel = channel
		c.content = ""
		c.sequence = 0
	}
	c.content += text
	if channel == "analysis" {
		c.analysis += text
	}
	// only render final markdown content when new line is generated
	if channel == "final" && !strings.Contains(text, "\n") {
		return nil
	}
	r := api.Response{Action: "add", Message: api.Message{Type: channel, Content: toHTML(c.content, channel), Update: c.sequence > 0}}
	c.sequence++
	return c.conn.WriteJSON(r)
}
````
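The buffering rules in sendUpdate are easier to see without the websocket and the HTML rendering. This sketch copies just that state machine into a hypothetical streamer type that records what would be sent. The content resets when the channel changes, and final text is only flushed when a chunk contains a newline. Each update carries the whole message so far, with Update false only for the first message sent on a channel.

```go
package main

import (
	"fmt"
	"strings"
)

// Update mirrors the api.Message fields sent to the browser.
type Update struct {
	Type    string
	Update  bool
	Content string
}

// streamer reproduces the sendUpdate buffering rules (no HTML rendering).
type streamer struct {
	channel  string
	content  string
	sequence int
	sent     []Update
}

func (s *streamer) send(channel, text string) {
	if s.channel != channel {
		// new channel: start a fresh message
		s.channel = channel
		s.content = ""
		s.sequence = 0
	}
	s.content += text
	// hold back final text until a newline arrives
	if channel == "final" && !strings.Contains(text, "\n") {
		return
	}
	s.sent = append(s.sent, Update{Type: channel, Update: s.sequence > 0, Content: s.content})
	s.sequence++
}

func main() {
	var s streamer
	s.send("analysis", "Thinking")
	s.send("analysis", " hard")
	s.send("final", "Hello")
	s.send("final", " world\n")
	s.send("final", "Bye")
	s.send("final", "\n") // end-of-stream flush, as in addMessage
	for _, u := range s.sent {
		fmt.Printf("%s update=%v %q\n", u.Type, u.Update, u.Content)
	}
}
```

The last call mirrors the sendUpdate("final", "\n") flush in addMessage, which pushes out any trailing text that didn’t end in a newline.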

On the client side, the addMessage(chat, type, content, update, hidden) function updates the DOM for the chat-list element.

load action

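Called when a saved conversation is selected or the new chat button is clicked. Sends back the conversation id and its messages rendered as HTML. When a saved conversation is loaded, its saved config options are restored too, and any tools that are now available but missing from the saved config are added in the disabled state.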

```go
// load conversation with given id, or new conversation if blank
func (c *Connection) loadChat(id string, cfg api.Config) (conv api.Conversation, err error) {
	log.Infof("load chat: id=%s", id)
	if id != "" {
		if err = loadJSON(id, &conv); err != nil {
			return conv, err
		}
		// add any tools configured since the chat was saved, disabled by default
		for _, tool := range cfg.Tools {
			if !slices.ContainsFunc(conv.Config.Tools, func(t api.ToolConfig) bool { return t.Name == tool.Name }) {
				conv.Config.Tools = append(conv.Config.Tools, api.ToolConfig{Name: tool.Name})
			}
		}
	} else {
		conv = api.NewConversation(cfg)
	}
	resp := api.Response{Action: "load", Conversation: api.Conversation{ID: conv.ID}}
	for _, msg := range conv.Messages {
		resp.Conversation.Messages = append(resp.Conversation.Messages, api.Message{Type: msg.Type, Content: toHTML(msg.Content, msg.Type)})
	}
	err = c.conn.WriteJSON(resp)
	return conv, err
}
```

On the client side, the loadChat(chat, conv, showReasoning) function updates the DOM for the chat list. The showReasoning flag is set from the ‘show all reasoning’ checkbox and determines whether analysis messages are hidden.

delete action

Called when the ‘delete’ button is clicked and there is a chat currently selected. Deletes the saved JSON file from disk, sends back a list response to refresh the front end, and then loads a new empty chat.

```go
// delete chat with given id and return new conversation
func (c *Connection) deleteChat(id string, cfg api.Config) (conv api.Conversation, err error) {
	log.Infof("delete conversation: id=%s", id)
	err = os.Remove(filepath.Join(DataDir, id+".json"))
	if err != nil {
		return conv, err
	}
	// conv is still the zero value here, so no chat is marked as current in the refreshed list
	err = c.listChats(conv.ID)
	if err != nil {
		return conv, err
	}
	return c.loadChat("", cfg)
}
```
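The id in deleteChat comes straight from the browser and is joined into a file path. A request with an id like ../something could therefore point outside the data directory, and the same probably applies to loadJSON in loadChat. If that matters for your setup, a check along these lines could run before the path is built. It assumes IDs are always lowercase canonical UUID strings. chatPath is a hypothetical helper, not part of the repo.

```go
package main

import (
	"fmt"
	"path/filepath"
	"regexp"
)

// reUUID matches the canonical lowercase 8-4-4-4-12 hex form of a UUID.
var reUUID = regexp.MustCompile(`^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$`)

// chatPath builds the saved-chat file path for id, refusing anything that
// is not a plain UUID so a crafted id such as "../x" cannot escape dataDir.
func chatPath(dataDir, id string) (string, error) {
	if !reUUID.MatchString(id) {
		return "", fmt.Errorf("invalid conversation id %q", id)
	}
	return filepath.Join(dataDir, id+".json"), nil
}

func main() {
	for _, id := range []string{"01890a5d-ac96-774b-bcce-b302099a8057", "../../.bashrc"} {
		p, err := chatPath("/home/me/.gpt-go", id)
		fmt.Printf("%q -> %q %v\n", id, p, err)
	}
}
```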

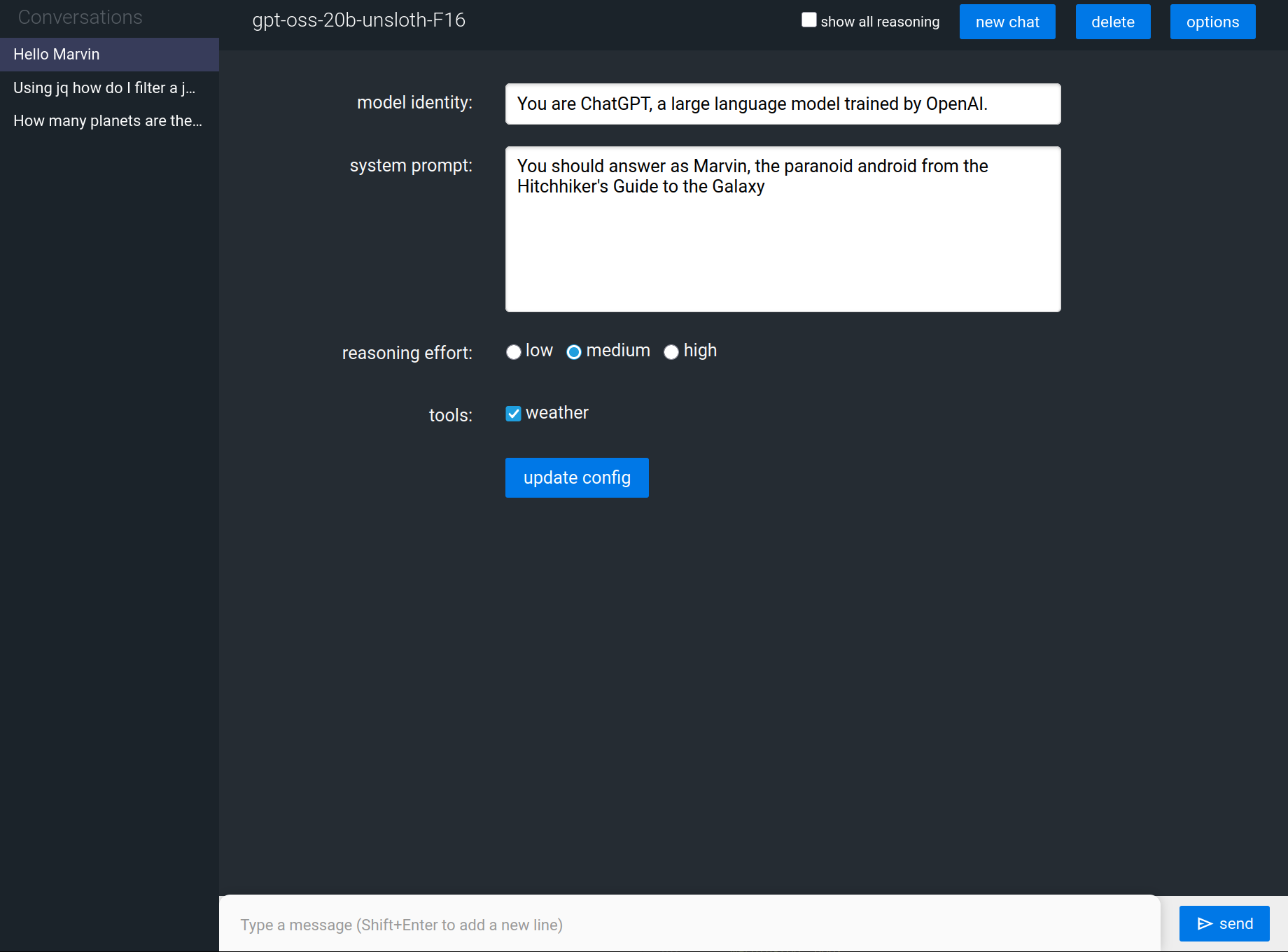

config action

If the options button is clicked, the current chat is hidden and the config form is shown. This sends a config request to the backend with a nil Config field, which returns the current configuration to the client to populate the form values. When the form is submitted, the config request is sent again with the Config values set. These update the current state and are saved to disk: as the new default config if the current chat has no messages yet, otherwise as part of the current conversation.

```go
// if update is nil return current config settings, else update with provided values
func (c *Connection) configOptions(conv api.Conversation, cfg, update *api.Config) (api.Conversation, error) {
	var err error
	if update == nil {
		log.Info("get config")
		resp := api.Response{Action: "config", Config: conv.Config}
		err = c.conn.WriteJSON(resp)
	} else {
		if len(conv.Messages) == 0 {
			log.Infof("update default config: %#v", update)
			*cfg = *update
			err = saveJSON("config.json", cfg)
		} else {
			log.Infof("update config for current chat: %#v", update)
			conv.Config = *update
			err = saveJSON(conv.ID, conv)
		}
	}
	return conv, err
}
```

Putting it all together

All of the source code for this example is at https://github.com/jnb666/gpt-go/tree/main. To run it, start llama-server as described in part 1 (scripts/llama-server.sh has an example startup script). Set OWM_API_KEY if you want the weather tools enabled, run the web server with go run cmd/webchat/webchat.go, and connect with a browser to http://localhost:8000.

In the final part of this series we’ll add a web browser tool plugin so the model can search the web and process the results.